Greetings and welcome to another episode of The Road To AI. This episode is about failures, and why they are extremely helpful for the development of ones mind.

So about two months have passed since my last post. Mostly because life has a way of preventing us from doing some actual work, And also because I FAILED. I FAILED so hard I didn’t even know how to write about it. I spent most of my time trying to build several programs to try to show off how beautiful genetic algorithms work, yet to no avail.

What Exactly Is The Genetic Algorithm?

Well, ever heard about a cool dude called Charles Darwin? Darwinism? the Race Theory? Evolution? Survival of the fittest? any of that?

If you haven’t – GO READ ABOUT IT!

If you have, and decided not to believe in it… Well I always say you can never be 100% sure about anything really. Still, accept that I believe that you are probably STUPID!

In short – We all have our very own unique DNA – A “document” withholding all of the properties, or genes, that make us ourselves. Both physically and mentally. This DNA is shuffled and mixed with another’s DNA to create a whole new version comprised of the random picking of properties from both DNAs. We call this new human version a “baby”. And we call the act of mixing and shuffling of genes… well, ask your parents if you don’t know.

Anywho, nature compels us to find suitable mates in order to produce a better, more adept version of ourselves. The outer world presents challenges such as weather, lack of natural resources, or stupidity, and our only way of overcoming them really is just producing a more adept subject. This is how humans evolved throughout millions of years from a small ameba of a father.

So how do we mimic the natural selection in a manner of solving real world problems?

The Laws Of Artificial Genetic Algorithms

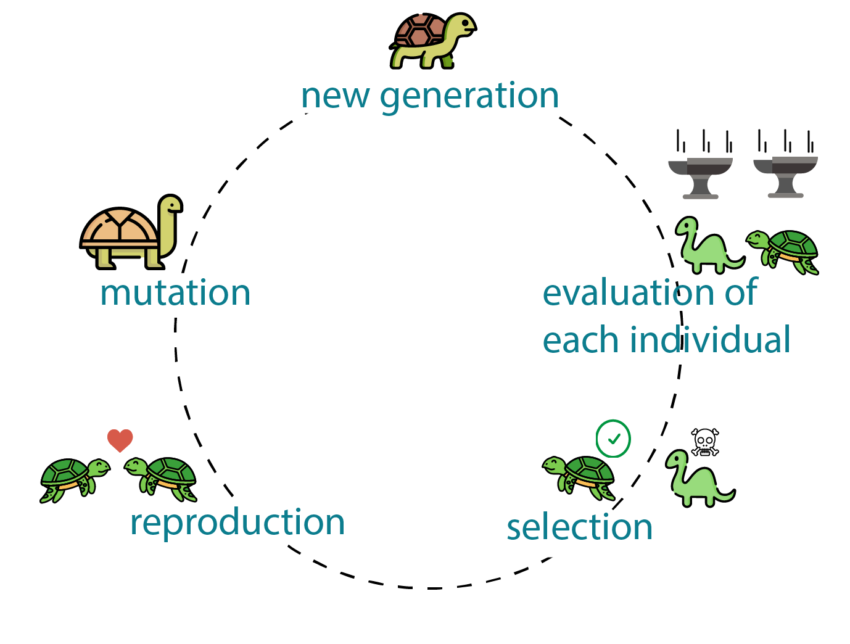

Just as in all great things, building an artificial problem solver using genetic algorithms, requires following simple rules.

- Create a population of sort.

* Each item of the population should have its own unique DNA.

* The population should be diverse and random, at first. - Selection: Set up a fitness function to separate the strongest from the weakest.

- Crossover: Mix and match the genes to create a new population.

The genes from the DNA with the highest fitness, should have a higher probability of moving forward into the new population. - Mutation: The genes should have a certain small probability to mutate, bring something new to the population. This ensures you wont get stuck with a “mediocre” solution/

The above present optimization knobs for a better performance. That is actually the hard part.

Population size –

The more the better? I don’t think so. More items in play, the more brain power you will need move all of them around.

You also need to consider the fitness function. with a big population you cant allow yourself to choose a proportional/linear function such as the following: fitness = constant * time_counted_until_death_of_item

Why? because when you assign probabilities for selection, the probability distribution becomes more evenly spread, and thus, for example, the best item gets a chance of 0.1% to be selected to move on, while the rest of the losers get 0.09% which isn’t that far from the former.

one way to get around it is using the “Exponential E” which looks like this:

fitness = e ^ (time_counted_until_death_of_item)

This means the fitness of the best is significantly higher then the loser’s one.

There are other known methods such as the soft minimax optimization taken straight out of decision theory textbooks.

One more issue is the randomization of the values. You want the population to be as diverse as you can, unless you have prior information which moves you to initially give more of a specific attribute(gene) to others.

Mutation –

Without mutation you might find yourself in a “stalemate”. The population could lean towards an unwanted local minimum, where our goal is to reach the total or a certain other minimum of our loss function. The mutation is the quantum tunneling you require to shift the population to a better direction.

The knob is the chance to mutate, and by how much. You can assign random values to the poor mutated souls, or you can just give them a nudge in a certain direction, like using a random gaussian with a specific small standard deviation.

Crossover –

Now that’s a world of opportunities on its own. How will you decide on the genes that move onto the child? Well, one way is to take the best three to create a new population out of, sifting them through the mutilation function to allow diversity. The obvious one is, of course, to use the assigned probabilities (from the fitness function) to choose two and create a new child with half of either chosen partners.

TIME –

The efficiency of the model is measured by the time it takes to reach convergence to the optimal solution.

Of course, if you do not converge into the optimal solution, then you FAILED! or just didn’t wait long enough for the mutation to get you out of the local minimum.

I Hereby Present My Failed Attempts

1. I tried to lunch a “satellite” into orbit:

2. I created a natural environment with predators and agents. each agent has one of three attributes at random strength mapped to its color. The attributes are the separation, cohesion and alignment factors as you saw in my last post about autonomous flocking agents.